This comprehensive solution integrates seamlessly into the Microsoft ecosystem, providing a unified environment for users to harness the power of data and cloud scalability without worrying about any of the infrastructure needed to perform your big data tasks. Microsoft Fabric has now been in general availability from 15th of November 2023 and here at twoday we have been utilizing this promising technology with some of our customers even while it was in preview.

In our previous blog posts (in Finnish), we have told how Microsoft chose us exclusively in Finland for the private preview of Microsoft Fabric and how we have accumulated valuable experience with this new generation data platform since the beginning of 2023. Thanks to this, we are the most experienced Microsoft Fabric expert in Finland.

In this blog post, we'll explore the key features that make Microsoft Fabric a game-changer in the world of cloud data processing.

All-in-One Platform

At its core, Microsoft Fabric serves as an online data processing platform designed to cater to a spectrum of data-related tasks. From data engineering to analytics and machine learning workloads, it provides a unified space for data professionals to work efficiently and collaboratively.

Business intelligence

One of the most standout features of Microsoft Fabric is its seamless integration with PowerBI. This synergy ensures a smooth transition from data processing to visualization, allowing users to derive meaningful insights from their datasets. Notably, the platform's capability to read directly from Lakehouse Delta tables eliminates the necessity of integrating your data platform layer with business-ready datasets into Power BI, as it is inherently built into Fabric. This integration streamlines the reporting process, providing a cohesive end-to-end solution.

Storage

Microsoft Fabric comes equipped with a built-in data lake, which is called OneLake. As the name suggest it is a single storage inside your Fabric tenant where you can land your data, process it and save in the desired formats, for instance as Delta tables in your Lakehouse. OneLake is built on top of ADLS (Azure Data Lake Storage) Gen2. The idea behind it is to have one copy of data throughout your organization in order to avoid unnecessary duplication and movement. OneLake also assists in enabling swift retrieval of report-ready data to PowerBI. This ensures that users can access the information they need in real-time, facilitating quick and informed decision-making.

Data Engineering

Looking at some of the other features that Fabric offers, it doesn't shy away from handling big data processing. With the integration of Apache Spark, users can leverage the power of distributed computing to process large datasets efficiently and with the variety of different capacity offerings, you can select the best compute setup for existing workloads and scale up or down as needed. Whether in notebooks or as part of Spark job definitions, the platform provides flexibility in addressing big data challenges.

Orchestration

In terms of orchestrating your data engineering workloads, a built-in Data Factory sets Microsoft Fabric apart by providing users with the ability to create data pipelines and flows seamlessly. For anyone familiar with original version of Azure Data Factory the transition to pipeline creation and orchestration in Fabric should be as easy as it can get.

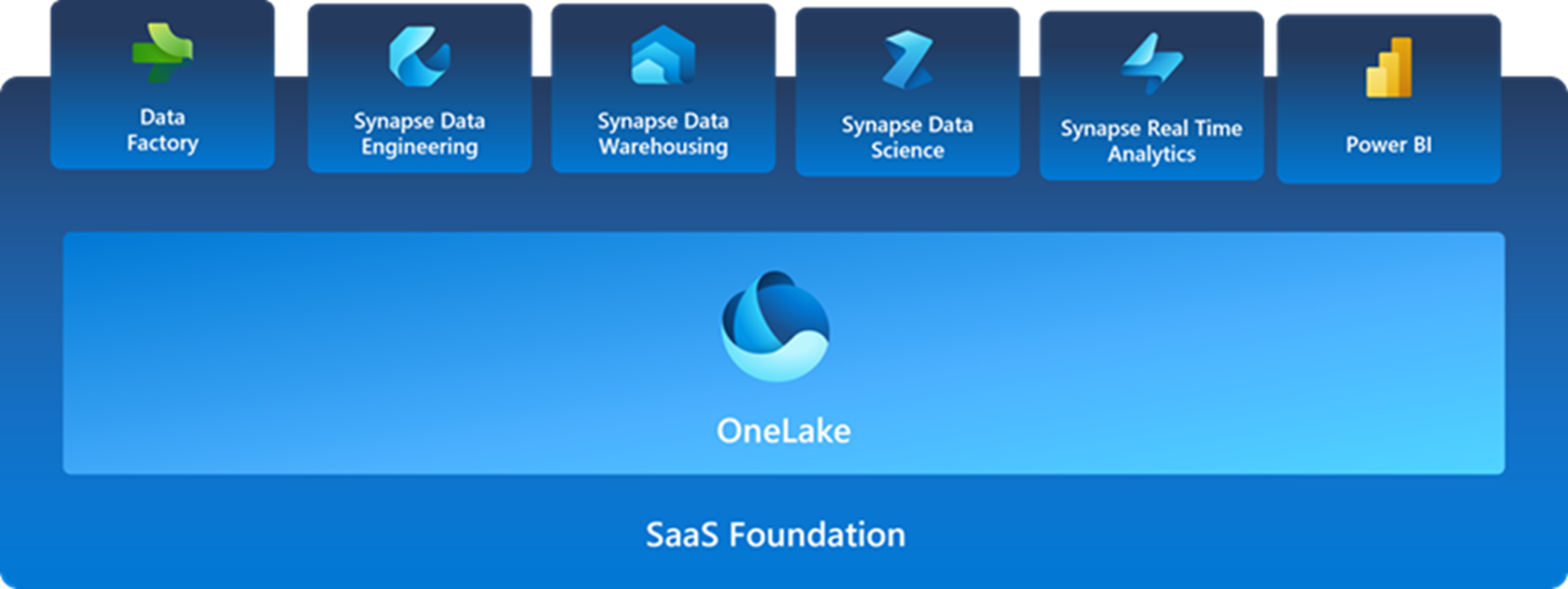

Since Microsoft is aiming to have a unified platform where different data personas can collaborate, there are different “experiences” available for different data specialists. Let’s look at some of the experiences that we have available in Fabric.

Figure 1: The shared SaaS foundation of Microsoft Fabric.

Data Factory experience

In the data factory experience, we can create data pipelines in a an almost identical fashion compared to Azure Data Factory. We have our Lookup, CopyData, ForEach and other activities that work in the same way. An example of a pipeline iterating through some configuration parameters retrieved by a notebook (Figure 2, Figure3):

-1.png?width=1235&height=274&name=MicrosoftTeams-image%20(22)-1.png)

Figure 2: A pipeline that uses a notebook activity to return some parameters for different entities and then iterate using each parameter set individually.

.png?width=1227&height=518&name=MicrosoftTeams-image%20(23).png)

Figure 3: Logic inside the ForEach activity that copies data from the source system, lands it to landing zone, processes the landed file and logs according to success/failure of different stages using the passed parameters.

Data Engineering experience

As previously mentioned, notebooks equipped with Apache Spark are one of the features that come with Microsoft Fabric. In the previous example we can see how they can be invoked in our pipelines and since it is possible to write custom code in them using a variety of languages (Python, Scala, R, SQL) it gives the developers great flexibility to achieve the desired goal.

.png?width=1105&height=1029&name=MicrosoftTeams-image%20(24).png)

Figure 4: Example of running PySpark code to query a log table to get summarized daily ingestion statistics.

Data Warehouse experience

The Synapse Data Warehouse is a 'traditional' data warehouse that supports the full transactional T-SQL capabilities like an enterprise data warehouse. The users of Fabric’s warehouse are fully in control of creating tables, loading, transforming, and querying your data in the data warehouse using either the Microsoft Fabric portal or T-SQL commands. Warehouse has full transactional DDL and DML support and is created by a customer.

A Warehouse is populated by one of the supported data ingestion methods such as the COPY INTO command, Pipelines, Dataflows, or cross database ingestion options such as CREATE TABLE AS SELECT (CTAS), INSERT… SELECT or SELECT INTO. It’s similarity to traditional data warehouses makes it easy for data professionals to adapt their existing knowledge while leveraging the benefits and scalability provided by Fabric.

.png?width=2068&height=874&name=MicrosoftTeams-image%20(25).png)

Figure 5: Ways of creating objects in Fabric’s Data Warehouse experience.

Data Science experience

Data Science users in Microsoft Fabric work on the same platform as business users, analysts and engineers. Data sharing and collaboration becomes more seamless across different roles as a result. Analysts can easily share Power BI reports and datasets, engineers can help investigate some of the problems that may be occurring from the source, and all of this is easily accessible to data science practitioners. The ease of collaboration across roles in Microsoft Fabric makes knowledge transferring and troubleshooting much easier for everyone.

With tools like PySpark/Python, SparklyR/R, notebooks can handle machine learning model training. ML algorithms and libraries can help train machine learning models. Library management tools can install these libraries and algorithms. Users have therefore the option to leverage a large variety of popular machine learning libraries to complete their ML model training in Microsoft Fabric.

Additionally, popular libraries like Scikit Learn can also develop models. MLflow experiments and runs can track the ML model training. Microsoft Fabric offers a built-in MLflow experience with which users can interact, to log experiments and models.

Conclusion

Microsoft Fabric emerges as a versatile and powerful solution for organizations grappling with diverse data-related tasks. From unstructured to structured data, from data engineering to analytics and machine learning, the platform's seamless integration with PowerBI, built-in data lake, T-SQL support for data warehousing workloads, Data Factory, and Apache Spark capabilities make it a comprehensive toolset for data professionals. As businesses continue to navigate the complexities of the data landscape, Microsoft Fabric stands out as a unified platform, unlocking the full potential of data for informed decision-making.